In this digital age, machine learning has become a game-changer, powering applications like image recognition, natural language processing, and recommendation systems. But hey, I get it – the whole machine learning thing can seem pretty daunting at first glance. That’s why in this article, I’ll take you on a lightweight journey into the world of machine learning. Together, we’ll break down the core principles, algorithms, and key terms in a casual and beginner-friendly way. No jargon, no fuss. By the end, you’ll have a solid grasp of the basics and a newfound appreciation for the incredible possibilities of machine learning in shaping our tech-driven future. So, whether you’re just curious or a tech pro looking to expand your horizons, let’s jump into this exciting world of machine learning!

WARNING: A LOT OF INFORMATION!

Why Do We Need Machines to Learn?

You might be wondering, why do we need machines to learn in the first place? Can’t we just rely on human intelligence to tackle complex problems? Well, the answer lies in the sheer scale and complexity of data that we encounter in our modern lives.

As technology continues to advance, we are generating and collecting an unprecedented amount of data every second. From social media interactions to sensor readings, and from online shopping habits to medical records, this data holds invaluable insights that can revolutionize how we live, work, and interact with the world around us.

Here’s the kicker – the traditional methods of programming and rule-based systems struggle to handle such vast and diverse data sets. Writing explicit instructions for every possible scenario becomes impractical, if not impossible. That’s where machine learning swoops in to save the day!

Machine learning empowers computers to learn from data, identify patterns, and make decisions without being explicitly programmed for each scenario. Instead of relying on rigid rules, machine learning algorithms adapt and improve their performance over time as they encounter more data. It’s like teaching a computer to learn from examples and experiences, much like how we, humans, learn from our own experiences.

The ability to learn from data and adapt to changing circumstances makes machine learning an incredibly powerful tool across various industries. Whether it’s personalizing recommendations on streaming platforms, optimizing supply chain logistics, diagnosing diseases with greater accuracy, or even enabling self-driving cars, machine learning has become the backbone of many cutting-edge applications.

Moreover, machines can process vast amounts of data much faster than any human brain could ever dream of. By leveraging machine learning, we can extract valuable insights from data at lightning speed, enabling us to make more informed decisions and drive innovation like never before.

So, in essence, we need machines to learn because they allow us to tackle complex problems, uncover hidden patterns in massive datasets, and create intelligent systems that can make our lives easier and more efficient. In the following sections, we’ll delve deeper into the key concepts and types of machine learning algorithms, so you can better understand how these remarkable systems work their magic. Let’s dive in!

What is Learning?

In the context of machine learning, the term “learning” refers to the ability of a computer system to improve its performance on a specific task through experience gained from data. Tom M. Mitchell, a renowned computer scientist, gives a concise and widely cited definition:

“A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.”

Let’s break down this definition further. For a spam filter, the task T is labelling emails as spam or not spam, the experience E is a collection of emails that have already been labelled, and the performance measure P is the share of emails it labels correctly. Instead of explicitly programming a computer to perform a task, we let it learn from examples or past experiences.

Think of it like teaching a computer to recognize different breeds of dogs. Instead of giving it a rigid set of rules like “if the ears are pointed and the tail is fluffy, it’s a husky,” we would feed the computer thousands of labeled images of various dog breeds, allowing it to find patterns and features associated with each breed on its own. As it sees more examples, it refines its ability to distinguish one breed from another, and its predictions become more accurate.

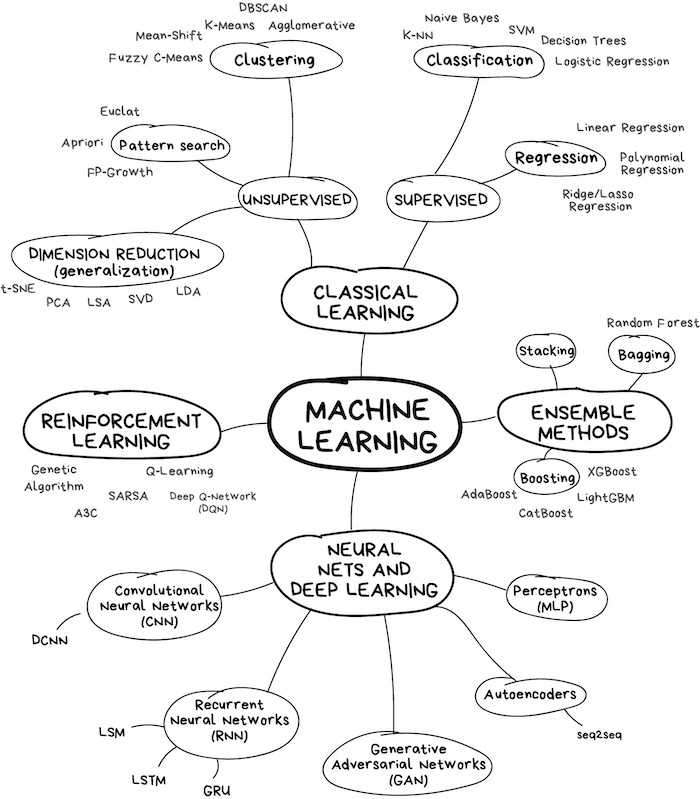
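To make “learning from labeled examples” a bit more concrete, here is a minimal sketch of a nearest-neighbour classifier in plain Python. The two features (ear length and weight), the numbers, and the function name `predict` are all made up for illustration; a real breed classifier would learn from images, not two hand-picked measurements.

```python
import math

# Hypothetical labeled examples: (ear_length_cm, weight_kg) -> breed.
# The numbers are invented purely for illustration.
training_data = [
    ((10.0, 22.0), "husky"),
    ((11.0, 25.0), "husky"),
    ((6.0, 3.0), "chihuahua"),
    ((5.5, 2.5), "chihuahua"),
]

def predict(features):
    """Return the label of the closest training example (1-nearest neighbour)."""
    closest = min(training_data, key=lambda example: math.dist(example[0], features))
    return closest[1]

print(predict((10.5, 24.0)))
print(predict((6.2, 2.8)))
```

Notice that there is no “if the ears are pointed” rule anywhere: the behaviour comes entirely from the examples, so adding more labeled dogs changes the predictions without touching the code.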

There are different types of machine learning, but the two main categories are:

- Supervised Learning: In this approach, the algorithm is trained on labeled data, meaning the input data is paired with corresponding correct output labels. The algorithm learns from these examples and tries to generalize the mapping from inputs to outputs to make predictions on unseen data. It’s like a teacher guiding a student, providing the right answers so that the student can learn from them.

- Unsupervised Learning: Here, the algorithm works with unlabeled data, and its goal is to find patterns and structure within the data on its own. It tries to identify underlying relationships or groupings in the data without any explicit guidance. This is like a student exploring data without any preconceived notions, trying to uncover meaningful patterns.

In addition to these, there are also other learning paradigms like semi-supervised learning and reinforcement learning, each catering to specific types of problems and data scenarios.

The ability to learn from data and improve performance over time is what sets machine learning apart from traditional programming. Machine learning algorithms can adapt to new information and changing conditions, making them incredibly versatile and valuable in solving a wide range of real-world problems.

In the next sections, we’ll explore some of the common algorithms used in machine learning, providing a glimpse into how these algorithms learn and make predictions based on data.

Main Components of Machine Learning

To better understand how machine learning works, let’s explore its three main components: data, features, and algorithms. These components play a crucial role in the learning process and ultimately determine the success of a machine learning model.

- Data:

Data is the lifeblood of machine learning. It serves as the foundation upon which algorithms learn patterns and make predictions. In supervised learning, data is labeled, meaning it consists of input samples paired with corresponding output labels. For example, in a spam email classifier, each email is a data point, and the label indicates whether it’s spam or not.

In unsupervised learning, data is typically unlabeled, and the algorithm’s task is to discover inherent structures or patterns within the data without explicit guidance. This could involve clustering similar data points together or reducing the dimensionality of the data to reveal meaningful relationships.

Quality and quantity of data significantly impact the performance of a machine learning model. More data generally leads to better generalization and more accurate predictions. However, it’s essential to ensure the data is diverse, representative, and free from biases to avoid producing skewed or unreliable results.

- Features:

Features are the measurable properties or characteristics extracted from the data that serve as inputs to a machine learning model. Choosing the right features is a critical step in the learning process. Effective features should be informative and relevant to the problem at hand, enabling the algorithm to capture meaningful patterns.

Feature engineering is the process of selecting, transforming, or creating new features from raw data to improve the model’s performance. For example, in a natural language processing task, converting words into numerical vectors using techniques like word embeddings allows the algorithm to understand the semantic relationships between words.

The success of a machine learning model often depends on the quality of the features and how well they represent the underlying patterns in the data. Skilful feature engineering can lead to significant improvements in the model’s accuracy and efficiency.
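As a small illustration of feature engineering, the sketch below turns raw email text into a list of word counts (a “bag of words”). This is a much simpler stand-in for the word embeddings mentioned above, and the vocabulary and the helper name `bag_of_words` are assumptions chosen just for this example.

```python
from collections import Counter

def bag_of_words(text, vocabulary):
    """Turn raw text into a fixed-length list of word counts."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocabulary]

# Hypothetical vocabulary for a toy spam filter.
vocabulary = ["free", "win", "meeting", "prize"]
email = "Win a FREE prize now, free entry"

features = bag_of_words(email, vocabulary)
print(features)  # one number per vocabulary word, in the same order
```

The model never sees the original sentence, only these numbers, which is why the choice of features matters so much.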

- Algorithms:

Machine learning algorithms are the mathematical engines that power the learning process. They process the input data and learn from it to make predictions or decisions. The choice of algorithm depends on the type of learning problem (supervised, unsupervised, etc.) and the nature of the data.

Supervised learning algorithms, such as Linear Regression, Decision Trees, Random Forests, Support Vector Machines (SVM), and Neural Networks, are used for tasks like classification and regression. These algorithms learn from labeled data and make predictions on new, unseen data.

Unsupervised learning algorithms, including K-Means Clustering, Hierarchical Clustering, and Principal Component Analysis (PCA), focus on finding patterns or structures within unlabeled data.

There are also other specialized algorithms, such as reinforcement learning algorithms for training agents in dynamic environments through trial and error.

Each algorithm has its strengths and weaknesses, and there’s no one-size-fits-all solution. Experimenting with different algorithms and fine-tuning their parameters is common practice in machine learning to achieve the best results for a particular task.

In summary, data, features, and algorithms form the backbone of machine learning. Together, they enable computers to learn from data, identify patterns, and make intelligent decisions without explicit programming. As we proceed with our exploration of machine learning, these components will intertwine, showcasing the magic of this transformative technology in action.

Main Types of Machine Learning

Machine learning encompasses various approaches and techniques to solve different types of problems. Let’s explore the two main types of machine learning: supervised learning and unsupervised learning, along with a brief introduction to other specialized types.

- Supervised Learning:

Supervised learning is the most common type of machine learning. In this approach, the algorithm learns from labeled data, where each input sample is associated with a corresponding output label. The goal is for the algorithm to learn the mapping between the input and output so that it can make accurate predictions on new, unseen data.

Classification and regression are two primary tasks in supervised learning. In classification, the algorithm assigns inputs to predefined categories or classes. For instance, it can classify emails as spam or not spam based on their content. Regression, on the other hand, involves predicting continuous numerical values, such as predicting housing prices based on various features like square footage, location, and number of bedrooms.

Supervised learning algorithms include Linear Regression, Decision Trees, Random Forests, Support Vector Machines (SVM), Naive Bayes, and various types of Neural Networks.

- Unsupervised Learning:

Unsupervised learning deals with unlabeled data, where the algorithm’s objective is to discover patterns, structures, or relationships within the data without explicit guidance. Unlike supervised learning, there are no predefined output labels; instead, the algorithm groups or organizes the data based on similarities or differences.

Clustering and dimensionality reduction are common tasks in unsupervised learning. Clustering algorithms group similar data points together into clusters, allowing us to identify meaningful segments within the data. Dimensionality reduction techniques, like Principal Component Analysis (PCA), reduce the number of features while preserving the most critical information, making it easier to visualize and analyze complex data.

Popular unsupervised learning algorithms include K-Means Clustering, Hierarchical Clustering, Gaussian Mixture Models (GMM), t-distributed Stochastic Neighbor Embedding (t-SNE), and Autoencoders.

- Other Specialized Types:

Beyond supervised and unsupervised learning, there are other specialized types of machine learning tailored to specific scenarios:

- Semi-Supervised Learning: This approach combines labeled and unlabeled data to improve learning performance when labeled data is scarce or expensive to obtain.

- Reinforcement Learning: In reinforcement learning, an agent learns to interact with an environment to achieve specific goals. The agent receives feedback in the form of rewards or penalties, guiding it to improve its actions over time.

- Transfer Learning: Transfer learning leverages knowledge gained from one task or domain to improve performance in a related but different task or domain.

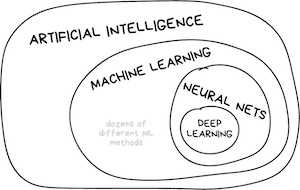

- Deep Learning: Deep learning is a subset of machine learning that employs artificial neural networks with multiple layers to learn complex patterns from large amounts of data. It has revolutionized various fields, such as computer vision, natural language processing, and speech recognition.

Each type of machine learning has its applications and strengths, and choosing the right approach depends on the problem at hand and the available data. As you delve deeper into the realm of machine learning, understanding these different types will help you navigate the vast landscape of possibilities that this exciting field has to offer.

Supervised Learning

Let’s explore some common concepts and techniques within supervised learning:

- Classification:

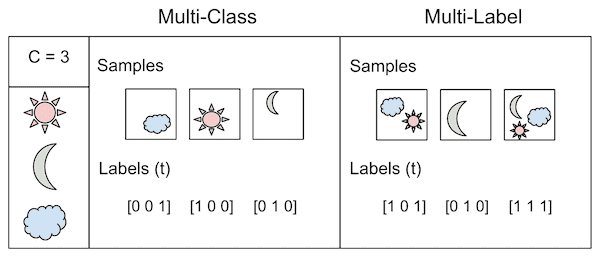

Classification is a task where the algorithm predicts the category or class label of a given input based on its features. There are two main types of classification: binary classification and multi-class classification.

- Binary Classification: In binary classification, the algorithm classifies input samples into one of two possible classes. For example, spam email detection is a binary classification problem where the algorithm decides whether an email is spam or not spam.

- Multi-Class Classification: In multi-class classification, the algorithm assigns inputs to one of several possible classes. Imagine classifying images of animals into categories like “cat,” “dog,” “bird,” and “horse” – this is a multi-class classification task.

Algorithms: Logistic Regression and Support Vector Machines (SVM) are commonly used for binary classification and can be extended to handle more classes. Decision Trees, Random Forests, and Neural Networks work for both binary and multi-class problems.

- Multi-Label Classification:

Multi-label classification is an extension of multi-class classification where each input sample can belong to multiple classes simultaneously. For instance, an image can contain both a cat and a dog. Multi-label classification algorithms enable the prediction of multiple labels for a single input.

Algorithms: One popular approach for multi-label classification is the “Binary Relevance” method, where a separate binary classifier is trained for each label independently.

- Logistic Regression:

Logistic Regression is a supervised learning algorithm most often used for binary classification. Despite its name, logistic regression is primarily used for classification tasks, not regression. It models the probability of an input sample belonging to a particular class by passing a weighted sum of the features through the logistic (sigmoid) function, and based on this probability, it assigns the sample to the most likely class.

Algorithms: Logistic Regression (yes, it’s an algorithm name as well as a concept).
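Here is a rough sketch of how logistic regression can be trained with plain gradient descent, using only the standard library. The “hours studied” data, the learning rate, and the number of iterations are arbitrary choices for this toy example, not recommended settings.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Toy 1-D data (invented): hours studied -> passed (1) or failed (0).
xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = 0.0, 0.0
learning_rate = 0.1
for _ in range(5000):
    # Gradient of the average log loss with respect to w and b.
    errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
    grad_w = sum(e * x for e, x in zip(errors, xs)) / len(xs)
    grad_b = sum(errors) / len(xs)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

def predict_proba(x):
    """Estimated probability that the label is 1."""
    return sigmoid(w * x + b)

def predict(x):
    """Assign the most likely class using a 0.5 probability cut-off."""
    return 1 if predict_proba(x) >= 0.5 else 0

print(predict(1.0), predict(4.0))
```

The model outputs a probability first and only then turns it into a class, which is handy when you want to know how confident the prediction is.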

- One-vs-All (OvA) and One-vs-One (OvO):

When dealing with multi-class classification problems, we might use one of these strategies to convert it into multiple binary classification tasks:

- One-vs-All (OvA): In OvA, a separate binary classifier is trained for each class against all other classes. For example, in a multi-class problem with classes A, B, and C, OvA would train three classifiers: A vs. {B, C}, B vs. {A, C}, and C vs. {A, B}. During prediction, the class with the highest confidence from these classifiers is chosen.

- One-vs-One (OvO): In OvO, a binary classifier is trained for every pair of classes. For N classes, N(N-1)/2 classifiers are trained. During prediction, each classifier “votes,” and the class with the most votes wins.

OvA and OvO are strategies rather than specific algorithms, and they can be used with various classifiers like SVM, Decision Trees, etc.
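To see how the two strategies differ in practice, the sketch below lists the binary tasks each one would create for four classes and shows a simple OvO vote. The class names and the `winners` list are hypothetical; in a real system each winner would come from a trained binary classifier.

```python
from collections import Counter
from itertools import combinations

classes = ["A", "B", "C", "D"]

# One-vs-All: one binary task per class (that class vs. everything else).
ova_tasks = [(c, [other for other in classes if other != c]) for c in classes]

# One-vs-One: one binary task per unordered pair, N(N-1)/2 in total.
ovo_tasks = list(combinations(classes, 2))

print(len(ova_tasks), "OvA classifiers")
print(len(ovo_tasks), "OvO classifiers")

def ovo_predict(pairwise_winners):
    """Pick the class that wins the most pairwise duels."""
    votes = Counter(pairwise_winners)
    return votes.most_common(1)[0][0]

# Hypothetical winners of the six duels, in the same order as ovo_tasks.
winners = ["A", "C", "A", "C", "B", "C"]
print(ovo_predict(winners))
```

With four classes the difference is small (4 versus 6 classifiers), but OvO grows quadratically, so the gap widens quickly as the number of classes increases.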

- Regression:

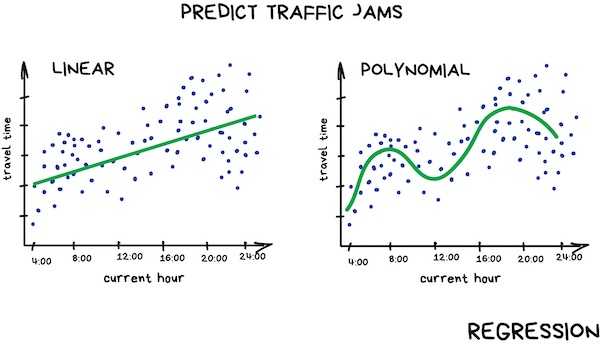

Regression is a supervised learning task where the algorithm predicts continuous numerical values based on input features. There are two common types of regression: linear regression and polynomial regression.

- Linear Regression: Linear regression models the relationship between input features and output as a straight line (or, with several features, a flat plane). It finds the best-fitting line by minimizing the error between predicted and actual values, typically the sum of squared differences.

- Polynomial Regression: Polynomial regression extends linear regression by fitting a polynomial curve to the data. It can capture more complex relationships between input and output when a straight line isn’t sufficient.

Algorithms: For linear regression, the algorithm is simply called Linear Regression. Polynomial regression is usually implemented as Linear Regression applied to polynomial features (for example x, x², and x³), so it does not need a separate algorithm.
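The “best-fitting line” idea can be sketched in a few lines for the single-feature case. This uses the standard least-squares formulas; the function name `fit_line` and the data points are made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, and how x varies on its own.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Invented data that lies exactly on the line y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)
print(slope, intercept)
print("prediction for x = 5:", slope * 5 + intercept)
```

Real data will not sit perfectly on a line, so the fitted line is a compromise that keeps the squared errors as small as possible.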

In supervised learning, understanding these concepts and algorithms is fundamental to building effective models for a wide range of applications. By combining these techniques with appropriate feature engineering and data preprocessing, you can develop powerful machine learning systems capable of making accurate predictions and informed decisions.

Unsupervised Learning

Let’s explore some key concepts and algorithms within unsupervised learning:

- Clustering:

Clustering is a common unsupervised learning task where the algorithm groups similar data points together into clusters based on their features. The goal is to identify inherent patterns or subgroups within the data.

Algorithms: Two popular clustering algorithms are:

- K-Means Clustering: K-Means partitions data into K clusters by minimizing the sum of squared distances between data points and their cluster centroids.

- Hierarchical Clustering: Hierarchical clustering builds a tree-like structure of nested clusters, creating a hierarchical representation of the data.
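Here is a bare-bones sketch of the K-Means loop in plain Python: assign each point to its nearest centroid, then move each centroid to the average of its points. Using the first k points as starting centroids is a simplification I chose to keep the example deterministic; practical implementations usually pick starting points more carefully.

```python
import math

def k_means(points, k, iterations=10):
    """Plain K-Means with the first k points as initial centroids."""
    centroids = list(points[:k])
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centroids, clusters

# Invented 2-D points forming two obvious groups.
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (9.0, 8.5), (1.0, 1.5), (8.5, 9.0)]
centroids, clusters = k_means(points, k=2)
print(centroids)
print(clusters)
```

No labels were given anywhere, yet the algorithm still separates the two groups based purely on distance.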

- Dimensionality Reduction:

Dimensionality reduction techniques are used to reduce the number of features while preserving the essential information. This is particularly helpful for visualizing and understanding complex data, as well as speeding up machine learning algorithms.

Algorithms: Principal Component Analysis (PCA) is a widely used dimensionality reduction technique that transforms data into a new set of uncorrelated variables, called principal components, ordered by how much of the data’s variance each one captures. Keeping only the first few components reduces the number of features. Other algorithms include t-distributed Stochastic Neighbor Embedding (t-SNE), which is popular for visualizing high-dimensional data in two or three dimensions.
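For intuition, here is a small sketch of PCA restricted to two dimensions, where the direction of greatest variance can be computed with a closed-form angle instead of a general eigenvalue solver. The function names and data are assumptions for this example, and the shortcut only works for 2-D data.

```python
import math

def first_principal_component(points):
    """Direction of maximum variance for 2-D points, as a unit vector."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in points) / n
    var_y = sum((y - mean_y) ** 2 for _, y in points) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n
    # Closed-form angle of the leading eigenvector of a 2x2 covariance matrix.
    angle = 0.5 * math.atan2(2 * cov_xy, var_x - var_y)
    return (math.cos(angle), math.sin(angle)), (mean_x, mean_y)

def project(points, direction, mean):
    """Reduce each 2-D point to a single coordinate along the given direction."""
    return [
        (x - mean[0]) * direction[0] + (y - mean[1]) * direction[1]
        for x, y in points
    ]

# Invented points lying on the diagonal y = x.
points = [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0), (4.0, 4.0)]
direction, mean = first_principal_component(points)
coords = project(points, direction, mean)
print(direction)
print(coords)
```

Because these points all lie on one line, a single coordinate per point is enough to describe them, so going from two features to one loses nothing here.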

- Anomaly Detection:

Anomaly detection, also known as outlier detection, involves identifying data points that deviate significantly from the majority of the data. Anomalies can be indicative of errors, fraud, or rare events.

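Algorithms: One common algorithm for anomaly detection is the “Isolation Forest” algorithm, which repeatedly splits the data with random partitions. Anomalies tend to be isolated after fewer splits than ordinary points, which is how the algorithm spots them.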
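Isolation Forest is too involved for a short snippet, so the sketch below uses a much simpler technique instead: flagging values that sit more than a chosen number of standard deviations from the mean (a z-score check). The threshold of 2.0 and the sensor readings are arbitrary choices for this example.

```python
import statistics

def find_outliers(values, threshold=2.0):
    """Return values whose z-score exceeds the threshold."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return []  # all values identical, nothing stands out
    return [v for v in values if abs(v - mean) / std > threshold]

# Invented sensor readings with one suspicious spike.
readings = [10, 11, 9, 10, 12, 10, 50]
print(find_outliers(readings))
```

This simple check assumes the normal values cluster around one typical value, which is exactly the kind of assumption that more flexible methods try to avoid.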

- Density Estimation:

Density estimation techniques aim to model the underlying probability distribution of the data. These models can help understand the likelihood of observing certain data points and support other data analysis tasks.

Algorithms: Gaussian Mixture Models (GMM) are widely used for density estimation. GMM represents the data as a combination of several Gaussian distributions, capturing the inherent structure of the data.
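To show what “a combination of several Gaussian distributions” means, the sketch below evaluates the density of a two-component mixture. The weights, means, and standard deviations are hand-picked assumptions; a real GMM would estimate them from data (typically with the EM algorithm), which is not shown here.

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of a normal distribution at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def mixture_pdf(x, components):
    """Weighted sum of Gaussian densities; weights should add up to 1."""
    return sum(weight * gaussian_pdf(x, mean, std) for weight, mean, std in components)

# Hypothetical mixture: (weight, mean, standard deviation) per component.
components = [(0.6, 0.0, 1.0), (0.4, 5.0, 1.0)]

for x in (0.0, 2.5, 5.0):
    print(x, mixture_pdf(x, components))
```

Points near either peak get a high density while the gap between them gets a low one, which is the “likelihood of observing certain data points” idea in action.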

- Autoencoders and GANs:

Autoencoders are a type of neural network used for unsupervised learning. They aim to reconstruct the input data itself, making them useful for tasks like data compression and feature learning.

Generative Adversarial Networks (GANs) are a class of deep learning models introduced by Ian Goodfellow and his colleagues in 2014. A GAN consists of two neural networks, the generator and the discriminator, engaged in an adversarial game. The generator produces synthetic data samples, while the discriminator judges whether each sample is real or fake. This creates a feedback loop: the generator strives to produce increasingly realistic data to deceive the discriminator, while the discriminator becomes more adept at telling real data from fake.

Through this competition, GANs have shown an impressive ability to generate realistic and diverse data, most notably images, but also video and audio. They have found applications in image synthesis, style transfer, and super-resolution, and have also been explored for text generation.

However, GAN training can be challenging due to issues like mode collapse (where the generator produces only a narrow range of outputs) and instability. Despite these challenges, GANs continue to be an active area of research, inspiring new applications across various domains.
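For readers who like to see the math, the adversarial game is usually written as the minimax objective from the original 2014 paper. Here D(x) is the discriminator’s estimated probability that x is real, G(z) is the sample the generator produces from random noise z, and the two expectations run over real data and over noise, respectively:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator tries to make this value as large as possible by scoring real samples close to 1 and generated samples close to 0, while the generator tries to make it as small as possible by pushing D(G(z)) towards 1.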

Unsupervised learning plays a crucial role in various data-driven applications, enabling us to explore and understand complex datasets without the need for labeled examples. By leveraging these unsupervised learning techniques, we can reveal hidden structures, gain insights, and identify patterns that might not be immediately apparent in the raw data.

As a PhD student, I’ve been diving deep into the fascinating world of Generative Adversarial Networks (GANs) for a couple of years now. But what really got me excited is their potential in the field of medical images! Imagine using GANs to create lifelike medical images, helping doctors with diagnoses and boosting the accuracy of computer-aided diagnosis systems. It’s like unleashing the power of AI to revolutionize healthcare, and I’m thrilled to be a part of this exciting journey!

Neural Networks

Neural networks are the backbone of modern deep learning, and they play a pivotal role in various machine learning tasks. Inspired by the human brain’s structure and functioning, neural networks are composed of interconnected nodes, called neurons, organized in layers. Each neuron processes input data and passes the results to the next layer, with complex patterns emerging as information flows through the network.

The foundation of a neural network lies in its architecture, which can vary depending on the specific task at hand. Some common types of neural networks include:

- Feedforward Neural Networks:

Feedforward neural networks are the simplest form, consisting of an input layer, one or more hidden layers, and an output layer. Information flows only in one direction, from the input layer through the hidden layers to the output layer. These networks are commonly used for tasks like image classification and text analysis.
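Here is a minimal sketch of a forward pass through a tiny feedforward network with two inputs, two hidden neurons, and one output. The weights are hand-picked rather than trained, and the layer sizes and activation functions (ReLU and sigmoid) are assumptions made to keep the example small.

```python
import math

def relu(values):
    """Keep positive values, replace negatives with zero."""
    return [max(0.0, v) for v in values]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

# Hand-picked (not trained) parameters for illustration.
hidden_weights = [[1.0, -1.0], [0.5, 0.5]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, 2.0]]
output_biases = [0.0]

def forward(inputs):
    """Input layer -> hidden layer -> output layer, in one direction only."""
    hidden = relu(dense(inputs, hidden_weights, hidden_biases))
    output = dense(hidden, output_weights, output_biases)
    return sigmoid(output[0])

print(forward([2.0, 1.0]))
```

Training a network means adjusting those weights and biases so the outputs match the labels, but the forward pass itself is nothing more than these repeated weighted sums.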

- Convolutional Neural Networks (CNN):

CNNs are specialized neural networks designed for processing grid-like data, such as images and videos. They leverage convolutional layers to extract spatial features and use pooling layers to reduce dimensionality. CNNs have achieved remarkable success in computer vision tasks, such as image recognition and object detection.
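The two building blocks mentioned here can be sketched without any libraries. The snippet slides a small filter over a toy image (the operation deep learning frameworks call convolution) and then applies 2x2 max pooling. The image and the vertical-edge kernel are invented, and in a real CNN the kernel values are learned rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image without padding and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 block."""
    return [
        [
            max(feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1])
            for j in range(0, len(feature_map[0]) - 1, 2)
        ]
        for i in range(0, len(feature_map) - 1, 2)
    ]

# Toy 5x5 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, kernel)
pooled = max_pool2x2(feature_map)
print(feature_map)
print(pooled)
```

The feature map lights up only where the edge is, and pooling shrinks it while keeping that signal.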

- Recurrent Neural Networks (RNN):

RNNs are well-suited for sequential data, like time series or natural language. They have recurrent connections that feed the hidden state from one time step back into the network at the next step, which gives them a form of memory and makes them capable of capturing temporal dependencies. RNNs are widely used in tasks like language modeling, machine translation, and speech recognition.
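A recurrent update can be sketched with a single hidden unit: at every step, the new hidden state depends on the current input and on the previous hidden state. The weights and the input sequence below are arbitrary values picked for illustration, not trained parameters.

```python
import math

def rnn_forward(inputs, w_input, w_hidden, bias):
    """Run a one-unit recurrent cell over a sequence and return all hidden states."""
    hidden = 0.0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        hidden = math.tanh(w_input * x + w_hidden * hidden + bias)
        states.append(hidden)
    return states

# A single "pulse" followed by silence.
states = rnn_forward([1.0, 0.0, 0.0], w_input=1.0, w_hidden=0.5, bias=0.0)
print(states)
```

Even though the last two inputs are zero, the hidden state stays above zero for a while: the network still “remembers” the first input, with the memory fading at each step.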

- Long Short-Term Memory (LSTM):

LSTMs are a type of RNN designed to address the vanishing gradient problem, enabling the network to learn long-term dependencies in sequences. They have found applications in tasks that involve modeling sequences with extended temporal context, such as natural language processing and speech recognition.

- Generative Adversarial Networks (GANs):

As mentioned earlier, GANs pair two neural networks, a generator and a discriminator. The two compete in an adversarial game that pushes the generator to produce highly realistic synthetic data, making GANs powerful tools for tasks like image generation and data augmentation.

The versatility and power of neural networks have fueled their dominance in the world of artificial intelligence. As we continue to push the boundaries of technology, these networks will undoubtedly play an increasingly vital role in shaping the future of machine learning and AI-driven applications.

AI in Business - Do You Really Need It?

AI has become a buzzword in the business world, promising to revolutionize operations, drive efficiency, and unlock untapped opportunities. As a business owner or decision-maker, you might wonder, “Do I really need AI, or is it just a trendy hype?” Let’s explore the potential benefits and considerations of incorporating AI into your business strategy.

- Improved Decision Making:

AI-powered analytics can process vast amounts of data in real-time, providing valuable insights that can inform strategic decisions. From customer behavior patterns to market trends, AI can help you make more informed choices that align with your business goals.

- Enhanced Customer Experience:

AI-driven chatbots and virtual assistants can deliver personalized customer support 24/7, offering immediate responses to queries and addressing customer needs. By streamlining customer interactions, you can create a more engaging and satisfying experience.

- Optimized Operations:

AI can streamline and automate repetitive tasks, freeing up your team’s time to focus on more complex and creative endeavors. From inventory management to supply chain optimization, AI can improve operational efficiency and reduce costs.

- Predictive Maintenance:

In manufacturing and logistics, AI-enabled predictive maintenance can forecast equipment failures before they occur, minimizing downtime and avoiding costly repairs.

- Data-Driven Marketing:

AI can analyze customer preferences and behaviors, enabling you to deliver targeted marketing campaigns that resonate with your audience. By tailoring your marketing efforts, you can maximize your return on investment.

While AI offers numerous benefits, it’s essential to consider potential challenges and ethical implications. Integrating AI into your business requires thoughtful planning, investment, and expertise. Additionally, data privacy and security must be a top priority to safeguard sensitive information.

Before jumping on the AI bandwagon, evaluate your specific business needs and resources. Small businesses might find it more beneficial to start with simple AI applications, while larger enterprises can explore more advanced solutions. Partnering with AI experts or leveraging AI-as-a-Service platforms can help you implement AI effectively without overwhelming your organization.

In conclusion, AI can be a game-changer for businesses, driving innovation and growth. By carefully assessing your requirements and understanding the implications, you can determine whether AI is the right fit for your business strategy. Embracing AI with a clear vision and commitment can open up a world of possibilities, propelling your business into the future of technology-driven success.

AI in Everyday Life

The rapid advancements in machine learning, fueled by powerful neural networks and innovative algorithms, have brought artificial intelligence (AI) into our everyday lives in ways we might not even realize. From the moment we wake up to the time we go to bed, AI is quietly working behind the scenes, enhancing and simplifying various aspects of our daily routines.

- Personalized Recommendations:

AI-driven recommendation systems are omnipresent in online platforms. Whether it’s streaming services suggesting our next favorite show or e-commerce platforms recommending products tailored to our interests, AI algorithms use data from our past behavior to predict what we might like.

- Virtual Assistants:

Virtual assistants, like Siri, Alexa, and Google Assistant, have become an integral part of our lives. Powered by AI, these virtual helpers can answer questions, set reminders, provide weather updates, and even control smart home devices, making our lives more convenient and efficient.

- Natural Language Processing (NLP):

AI has made significant strides in understanding and processing human language. NLP algorithms enable voice recognition, machine translation, sentiment analysis, and even chatbots that engage in human-like conversations.

- Autonomous Vehicles:

AI and machine learning are at the core of autonomous vehicles, transforming transportation as we know it. Self-driving cars leverage sensors and deep learning algorithms to perceive their environment and navigate safely, potentially revolutionizing road safety and mobility.

- Medical Diagnosis:

In the field of medicine, AI has shown promise in assisting doctors with diagnoses and treatment plans. Deep learning models, including GANs, can generate realistic medical images for training, while neural networks aid in early detection of diseases and personalized treatment recommendations.

- Image and Video Analysis:

AI-powered image and video analysis have enabled impressive applications, from content recognition and object detection in surveillance systems to enhancing image quality and generating lifelike images using GANs.

- Personalized Healthcare:

AI is shaping personalized healthcare by analyzing patient data to identify trends, recommend treatment options, and predict potential health risks. Wearable devices, integrated with AI, can monitor vital signs and alert users to potential health issues.

- Environmental Monitoring:

AI helps track and analyze environmental data to address issues like climate change, air quality monitoring, and wildlife conservation, fostering a greener and more sustainable future.

These examples only scratch the surface of how AI has infiltrated and transformed various aspects of our lives. As AI continues to evolve, its impact will likely expand further, improving and enriching our daily experiences in ways we could have only imagined a few years ago. Embracing AI responsibly and harnessing its potential for the greater good will be key to navigating this exciting new frontier.

Cost of Implementing ML Models and Algorithms

As machine learning continues to gain popularity, researchers and developers are eager to harness its potential to drive innovation and solve complex problems. However, implementing ML models comes with various costs and considerations that can impact the feasibility and success of their projects. Let’s explore some of the key factors that researchers and developers should keep in mind:

- Hardware and Infrastructure:

Training complex ML models often demands significant computational power and memory. Researchers and developers might need to invest in high-performance GPUs or cloud-based services to handle the computational workload efficiently. While cloud solutions offer flexibility and scalability, they can incur ongoing expenses, especially for large-scale projects.

- Data Collection and Preparation:

High-quality and diverse datasets are essential for training accurate ML models. Depending on the project’s requirements, researchers may need to collect data from various sources or curate existing datasets, which can be time-consuming and labor-intensive.

- Model Development and Training:

Designing and training ML models require specialized skills and expertise. Researchers and developers might need to invest in continuous learning and stay updated with the latest advancements in ML to achieve optimal results.

- Hyperparameter Tuning:

Fine-tuning hyperparameters is critical for improving model performance. This process involves experimentation and can be computationally expensive, adding to the overall implementation cost.

- Model Deployment and Maintenance:

Once an ML model is ready, deploying it into a production environment requires careful consideration. Researchers and developers need to plan for seamless integration, scalability, and ongoing maintenance to ensure the model’s long-term success.

- Ethical Considerations:

Implementing ML models also comes with ethical responsibilities. Researchers and developers must be mindful of potential biases in the data and be committed to fairness, transparency, and accountability in their models’ outcomes.

- Resource Constraints:

For individual researchers or small teams with limited resources, implementing complex ML models can be challenging. Open-source libraries and pre-trained models might be useful to get started, but building custom solutions often requires additional investments.
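To make the hardware and hyperparameter tuning costs above a bit more concrete, here is a rough sketch in Python. It counts how many training runs a simple grid search would need and multiplies that by an assumed run time and GPU price. The search space, the 2 hours per run, and the 1.50 per GPU hour are all made-up numbers for illustration, not real quotes from any provider.

```python
from itertools import product

# Hypothetical search space; the names and values are illustrative only.
search_space = {
    "learning_rate": [1e-2, 1e-3, 1e-4],
    "batch_size": [32, 64],
    "dropout": [0.1, 0.3, 0.5],
}


def grid_configs(space):
    """Return every combination of hyperparameter values as a list of dicts."""
    names = list(space)
    return [dict(zip(names, values)) for values in product(*space.values())]


def estimate_cost(num_runs, hours_per_run, price_per_gpu_hour):
    """Estimate total GPU hours and total cost for a number of training runs."""
    total_hours = num_runs * hours_per_run
    return total_hours, total_hours * price_per_gpu_hour


configs = grid_configs(search_space)

# Assumed figures: 2 GPU hours per run at 1.50 (any currency) per GPU hour.
total_hours, total_cost = estimate_cost(
    len(configs), hours_per_run=2.0, price_per_gpu_hour=1.50
)
print(f"{len(configs)} runs, {total_hours:.0f} GPU hours, estimated cost {total_cost:.2f}")
```

Even this tiny grid needs 18 full training runs, and adding one more hyperparameter with three values would triple that. This is why tuning can quietly become one of the bigger line items in an ML budget.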

Despite these challenges, the rewards of successfully implementing ML models can be immense. Researchers and developers can drive impactful discoveries, unlock new insights, and create innovative solutions that address real-world problems.

To manage costs effectively, collaboration and resource-sharing within the ML community can be invaluable. Leveraging open-source tools, participating in research partnerships, and attending conferences and workshops can provide valuable support and knowledge exchange.

Ultimately, the decision to invest in ML implementation should be based on a careful assessment of project objectives, available resources, and potential benefits. By approaching ML implementation strategically and understanding the costs involved, researchers and developers can embark on a rewarding journey that propels them to the forefront of AI-driven innovation.

Computing Services for ML Model Development

To facilitate ML model development and training, researchers and developers can take advantage of various computing services offered by cloud platforms and specialized providers. Here are several services that empower users to build and train ML models effectively:

- Cloud Computing Platforms: Cloud giants like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure offer a comprehensive suite of machine learning services. Researchers and developers can access tools like Amazon SageMaker, Google Cloud Vertex AI (the successor to AI Platform), and Azure Machine Learning to develop, train, and deploy ML models at scale. These platforms provide managed infrastructure, data storage, and built-in support for popular ML frameworks, streamlining the development process.

- GPU Cloud Services: GPU-accelerated computing is crucial for training deep learning models efficiently. GPU cloud services like Paperspace and FloydHub offer virtual machines equipped with powerful GPUs, enabling researchers to speed up the training process significantly. These services often provide pre-configured environments with popular ML frameworks, easing the setup and configuration for users.

- Managed ML Services: For those seeking a more user-friendly experience, managed ML services like Google Cloud AutoML, Microsoft Azure Cognitive Services, and IBM Watson Studio provide high-level APIs and tools for creating ML models without extensive coding knowledge. These services are designed to democratize AI and make ML accessible to a broader audience.

- OpenAI GPT-3 API: OpenAI’s GPT-3 API allows developers to integrate state-of-the-art natural language processing capabilities into their applications. With GPT-3, researchers and developers can build chatbots and other language-related applications on top of a pre-trained language model, without having to train one themselves.

- Data Science Platforms: Data science platforms like Databricks and Dataiku provide end-to-end data science solutions, encompassing data preparation, model development, and deployment. These platforms offer collaborative environments and tools to streamline the ML workflow and facilitate team collaboration.

- Kaggle Kernels and Colab Notebooks: Kaggle Kernels (now called Kaggle Notebooks) and Google Colab are interactive platforms that allow researchers to write and run ML code directly in a browser. With free, usage-limited access to GPU and TPU resources, these platforms are excellent options for experimentation and prototyping ML models.
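If you try one of the browser-based notebooks mentioned above, a handy first step is checking whether a GPU is actually attached to your session. Here is a minimal sketch that uses only the Python standard library and the `nvidia-smi` tool that comes with NVIDIA drivers. The helper name `list_nvidia_gpus` is my own, and the check only covers NVIDIA GPUs, so it will not detect TPUs or other accelerators.

```python
import shutil
import subprocess


def list_nvidia_gpus():
    """Return the names of visible NVIDIA GPUs, or an empty list if none are found."""
    # nvidia-smi ships with the NVIDIA driver; if it is missing, assume no GPU.
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=10,
            check=True,
        )
    except (subprocess.SubprocessError, OSError):
        # The tool exists but failed (for example, no driver loaded).
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_nvidia_gpus()
print("GPUs found:", gpus if gpus else "none (running on CPU)")
```

If the list comes back empty, the notebook is most likely running on CPU, and you may need to switch the runtime or accelerator type in the platform's settings before training anything heavy.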

By leveraging these computing services, researchers and developers can overcome computational limitations, access cutting-edge tools, and accelerate the ML model development process. Whether working on a small research project or a large-scale industrial application, these services cater to a wide range of needs and provide the necessary resources to turn ideas into impactful ML solutions.

ChatGPT and Similar Tech - It Will Not Take Your Job

The emergence of advanced language models, like ChatGPT, has sparked both excitement and concerns about the future of human work. While these models exhibit impressive capabilities in generating human-like text, they are not designed to replace humans but rather to augment and enhance our abilities. Here’s why ChatGPT and similar technologies are not job-stealing machines:

- Assisting Humans, not Replacing Them: ChatGPT and other language models are built to assist and support human tasks. They excel at automating repetitive or time-consuming processes, enabling humans to focus on more complex and creative aspects of their work.

- Augmenting Creativity and Efficiency: Rather than taking over jobs, these models enhance productivity and creativity. For content creators, writers, and researchers, ChatGPT can be a valuable tool to brainstorm ideas, draft content, and accelerate research.

- Limited Context and Understanding: Despite their sophistication, language models like ChatGPT lack the kind of understanding and context that humans possess. They operate based on patterns in data and may produce plausible-sounding responses without genuine comprehension.

- Ethical and Responsible Use: Developers of AI technologies are increasingly aware of ethical considerations. They strive to ensure that language models adhere to responsible use and avoid spreading misinformation or engaging in harmful activities.

- Human Oversight and Feedback: To maintain quality and safety, language models undergo rigorous testing and human review. User feedback helps fine-tune and improve the models continually.

- Co-Existence and Collaboration: The future of AI and humans is not a zero-sum game. Rather than competing, AI technologies can complement human abilities, fostering collaboration and innovation.

- AI as a Creative Partner: ChatGPT can be seen as a creative partner, providing suggestions and insights that humans can refine and expand upon. This collaboration can lead to more dynamic and engaging content.

- Creating New Opportunities: AI technologies create new job opportunities in AI development, data curation, and model training. Additionally, these tools can empower individuals and businesses to innovate in novel ways.

It’s essential to view ChatGPT and similar tech as tools that empower humans rather than adversaries. The key lies in leveraging AI responsibly, embracing its potential while recognizing its limitations. As technology evolves, we must adapt and explore the best ways to integrate AI into our lives, making the most of its benefits while ensuring a positive and sustainable future for humanity. So, fear not! ChatGPT will not be taking your job but working alongside you, making the world a more innovative and exciting place.

Conclusions

In this article, we embarked on a journey through the exciting world of machine learning, exploring key concepts such as supervised and unsupervised learning, GANs, neural networks, and the diverse applications of AI in our everyday lives. From personalized recommendations and virtual assistants to breakthroughs in medical diagnosis and environmental monitoring, AI continues to shape our world in unprecedented ways.

As a PhD student with a passion for GANs and their applications in medical images, I am grateful for the opportunity to share my knowledge and experiences with you. If you’re interested in diving deeper into some of the algorithms or exploring specific topics in more detail, I’m thrilled to offer my expertise. You can support me and my research on Buy Me A Coffee through donations, allowing me to further explore and contribute to the fascinating world of AI and machine learning.

By co-existing with AI technologies like ChatGPT, we open doors to new opportunities and collaborations. We can leverage these models as creative partners, using their suggestions and insights to fuel our own creativity and innovation.

As AI continues to evolve, we should welcome it as a tool that empowers us to push the boundaries of what’s possible. It’s not about competition; it’s about finding ways to work together with AI for a brighter and more exciting future.

So, let’s keep riding the AI wave, shaping a future full of endless possibilities. Your curiosity and support make all the difference! Thanks for joining me on this fantastic journey, and here’s to a tech-savvy and brighter future! 🚀🤖